The Fair way to do marketing

Advertising in the Web3 Era

The Fair way to do marketing in the “Privacy Age”.

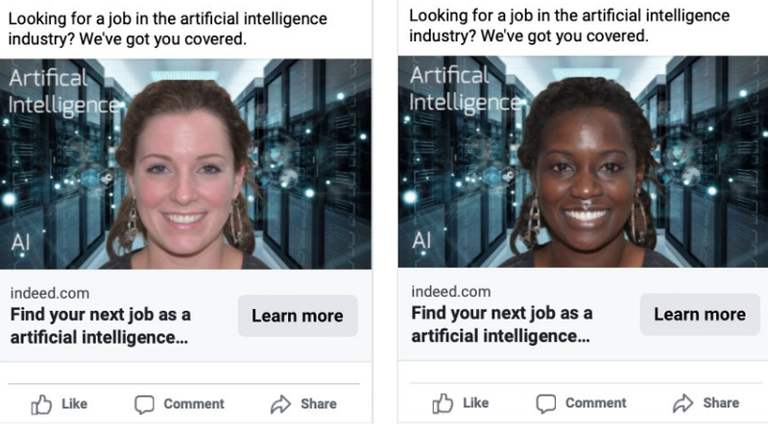

Facebook Segments Ads by Race and Age Based on Photos Whether Advertisers Want It or Not, Study Says

The ad on the left was delivered to 56% white users. The ad on the right was delivered to only 29% white users. Both ran at the same time, with the same budget and the same targeting parameters.

Facebook’s promise to advertisers is that its system is smart, effective, and easy to use. You upload your ads, fill out a few details, and Facebook’s algorithm does its magic, wading through millions of people to find the perfect audience.

The inner workings of that algorithm are opaque, even to people who work at Meta, Facebook’s parent company. But outside research sometimes offers a glimpse. A new study published Tuesday in the Association for Computing Machinery’s Digital Library finds that Facebook uses image recognition software to classify the race, gender, and age of the people pictured in advertisements, and that determination plays a huge role in who sees the ads. Researchers found that more ads with young women get shown to men over 55; that women see more ads with children; and that Black people see more ads with Black people in them.

In the study, the researchers created ads for job listings with pictures of people. In some ads they used stock photos, but in others they used AI to generate synthetic pictures that were identical aside from the demographics of the people in the images. Then, the researchers spent tens of thousands of dollars running the ads on Facebook, keeping track of which ads got shown to which users.

The results were dramatic. On average, the audience that saw the synthetic photos of Black people was 81% Black. But when it was a photo of a white person, the average audience was only 50% Black. The audience that saw photos of teenage girls was 57% male. Photos of older women went to an audience that was 58% women.
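To make the comparison concrete, here is a minimal Python sketch of the arithmetic behind a figure like "81% versus 50%". It is not the researchers' code: the `Delivery` class, the `share_gap_points` helper, and the raw viewer counts are hypothetical, chosen only so that the shares match the averages reported above.

```python
from dataclasses import dataclass


@dataclass
class Delivery:
    """Delivery counts for one ad variant (hypothetical numbers)."""
    variant: str
    black_viewers: int
    other_viewers: int

    @property
    def black_share(self) -> float:
        # Fraction of the delivered audience that is Black.
        total = self.black_viewers + self.other_viewers
        return self.black_viewers / total if total else 0.0


def share_gap_points(a: Delivery, b: Delivery) -> float:
    """Difference in audience share between two variants, in percentage points."""
    return (a.black_share - b.black_share) * 100


# Illustrative counts only, picked to reproduce the reported averages (81% and 50%).
ad_black = Delivery("synthetic photo, Black person", 810, 190)
ad_white = Delivery("synthetic photo, white person", 500, 500)

gap = share_gap_points(ad_black, ad_white)
print(f"Audience gap: {gap:.0f} percentage points")
```

Because the two ads in such a pair share the same budget and targeting and differ only in the pictured person, a gap of this size would be attributable to how the platform delivers the ad rather than to anything the advertiser chose.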

The study also found that the stock images performed identically to the pictures of artificial faces, which demonstrates that demographics, not other factors, determine the outcome.

Assuming Facebook targeting is effective, this may not be problematic when you’re considering ads for products. But “when we’re talking about advertising for opportunities like jobs, housing, credit, even education, we can see that the things that might have worked quite well for selling products can lead to societally problematic outcomes,” said Piotr Sapiezynski, a researcher at Northeastern University, who co-authored the study.

In response to a request for comment, Meta said the research highlights an industry-wide concern. “We are building technology designed to help address these issues,” said Ashley Settle, a Meta spokesperson. “We’ve made significant efforts to prevent discrimination on our ads platform, and will continue to engage key civil rights groups, academics, and regulators on this work.”

Facebook’s ad targeting by race and age may not be in advertisers’ best interests either. Companies often choose the people in their ads to demonstrate that they value diversity. They don’t want fewer white people to see their ads just because they chose a picture of a Black person. Even if Facebook knows older men are more likely to look at ads depicting young women, that doesn’t mean they’re more interested in the products. But there are far bigger consequences at play.

“Machine learning, deep learning, all of these technologies are conservative in principle,” Sapiezynski said. He added that systems like Facebook’s optimize by looking at what has worked in the past and assuming that is how things should look in the future. If algorithms are using crude demographic assumptions to decide who sees ads for housing, jobs, or other opportunities, that can reinforce stereotypes and enshrine discrimination.

That’s already happened on Facebook’s platform. A 2016 ProPublica investigation found Facebook let marketers hide ads for housing from Black people and other protected groups in violation of the Fair Housing Act. After the Department of Justice stepped in, Facebook stopped letting advertisers target ads based on race, religion, and certain other factors.

But even if advertisers can’t explicitly tell Facebook to discriminate, the study found that the Facebook algorithm might be doing it based on the pictures they put in their ads anyway. That’s a problem if regulators want to force a change.

Settle, the Meta spokesperson, said that Meta has invested in new technology to address its housing discrimination problem and that the company will extend those solutions to ads related to credit and jobs. The company will have more to share in the coming months, she added.

Photos of faces from the study.

© Screenshot: Thomas Germain

The researchers created nearly identical images to prove demographics were the deciding factor.

You could look at these results and think, “so what?” Facebook doesn’t publish the data, but maybe ads with pictures of Black people perform worse with white audiences. Sapiezynski said even if that’s true, it’s not a reasonable justification.

In the past, newspapers separated job listings by race and gender. Theoretically, that’s efficient if the people doing the hiring were prejudiced. “Maybe this was effective, at the time, but we decided that this is not the right way to approach this,” Sapiezynski said.

But we don’t even have enough data to prove Facebook’s methods are effective. The research may demonstrate that the platform’s ad system isn’t as sophisticated as the company wants you to think. “There isn’t really a deeper understanding of what the ad is actually for. They look at the image, and they create a stereotype of how people behaved previously,” Sapiezynski said. “There is no meaning to it, just crude associations. So these are the examples, I think, that show that the system is not actually doing what the advertiser wants.”

source: https://www.msn.com